By Lucas | September 22, 2015

Not too long ago, I did my first post on Apache Spark, a Spark dataframes tutorial. I’ve continued to experiment with Spark since taking my first tentative steps with it just a few months ago. One of the challenges with Spark is that it has a reputation for being difficult to deploy at scale. Stepping in to try to solve that problem is Databricks. Databricks offers the ability for corporations to deploy an optimized Spark via the cloud with some very nice extra bells and whistles.

I took advantage of the Databricks 1-month trial to see how I liked their product, and I came away impressed in my brief time with it. Databricks was founded by the team that created Spark and contributed 75% of the code base to Spark in the last year, according to their website. Consequently, my primary hope was that it would be simple to get up and running with Spark at peak efficiency against a Hadoop cluster on Databricks, so that I could spend my time manipulating data and not fiddling with Spark configuration.

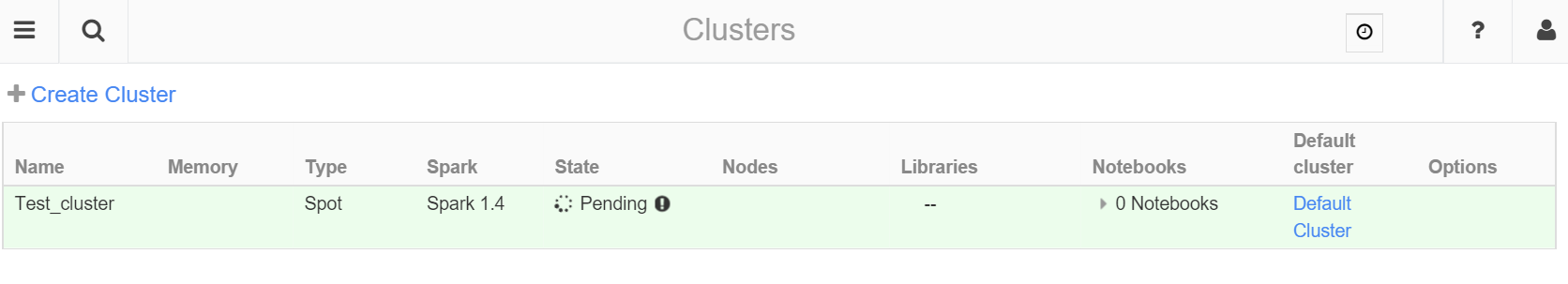

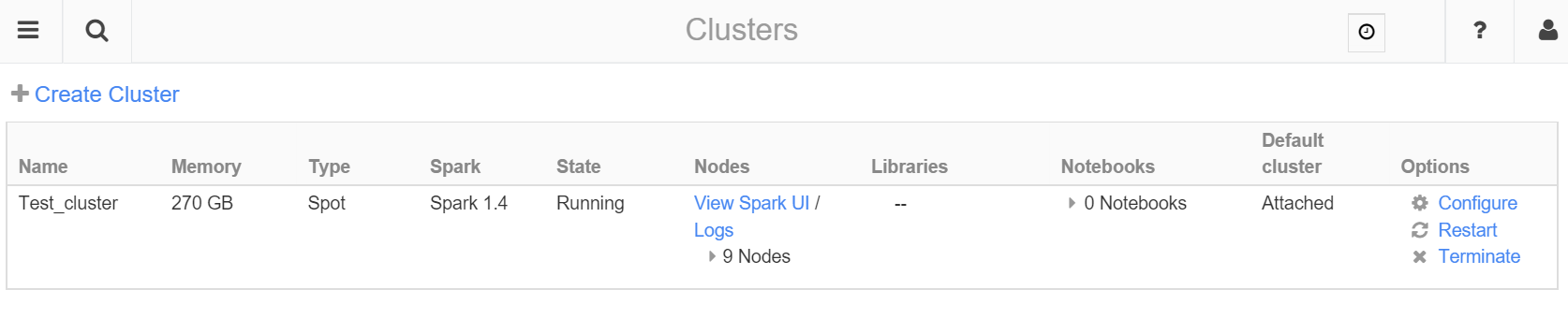

I certainly found that to be the case. When signing up for a Databricks account, I provided access to my Amazon Web Services (AWS) account. This gives Databricks the ability to have Spark access data in any S3 buckets I have created. After logging into Databricks, it’s a matter of a few clicks to have Databricks create a Hadoop cluster for Spark to work with. This gets billed to your AWS account via EC2. If you are doing short-term work that can risk being interrupted, you can use “spot instances” to keep the costs down. I was able to work on a 9-node cluster with 30 GB of memory and 4 cores per node for a couple of hours for about $1, which is the cost-efficient magic of cloud computing, something I’m still pretty new to.
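To put those numbers in perspective, here is a rough back-of-the-envelope calculation in Python. It assumes that “a couple of hours” means exactly 2 hours and that the total bill was exactly $1; both are round-number assumptions for illustration, not figures from an AWS invoice.

```python
# Cluster shape as described above.
nodes = 9
memory_gb_per_node = 30
cores_per_node = 4

# Assumptions for illustration only: "a couple of hours" taken as 2,
# and "about $1" taken as exactly 1.00.
hours = 2
total_cost_usd = 1.00

total_memory_gb = nodes * memory_gb_per_node   # aggregate cluster memory
total_cores = nodes * cores_per_node           # aggregate cluster cores
node_hours = nodes * hours                     # billing units consumed
cost_per_node_hour = total_cost_usd / node_hours

print(f"Cluster: {total_memory_gb} GB memory, {total_cores} cores")
print(f"Implied spot price: ${cost_per_node_hour:.3f} per node-hour")
```

Under those assumptions, the cluster offers 270 GB of memory across 36 cores, at an implied price of roughly five and a half cents per node per hour.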

It took about 10 minutes for my cluster to launch. Once your cluster is launched, its status in the Databricks console changes from “Pending” to “Running.” At this point, you can restart or terminate the cluster from the console at any time, or head into the notebook to do some analysis.

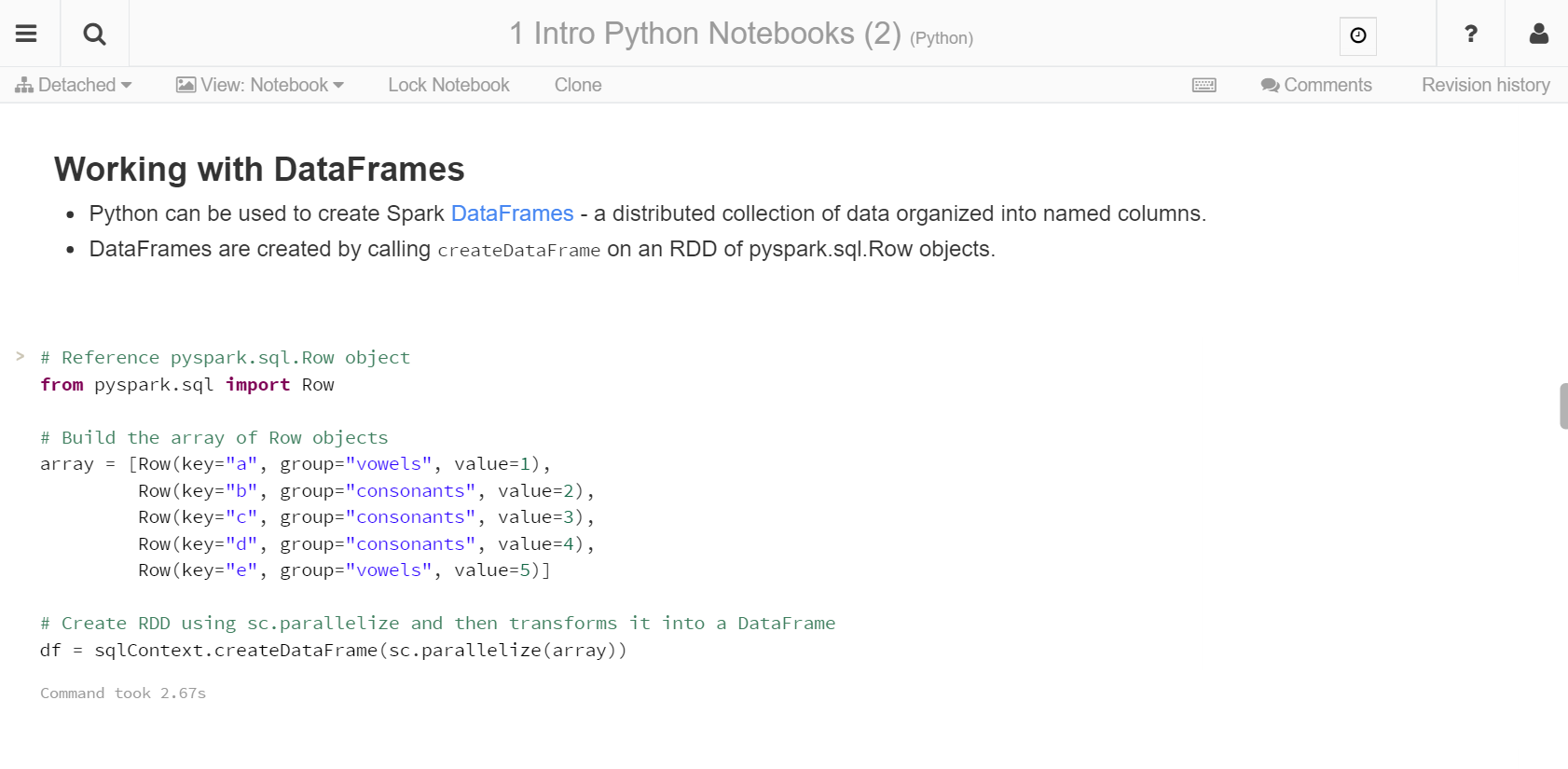

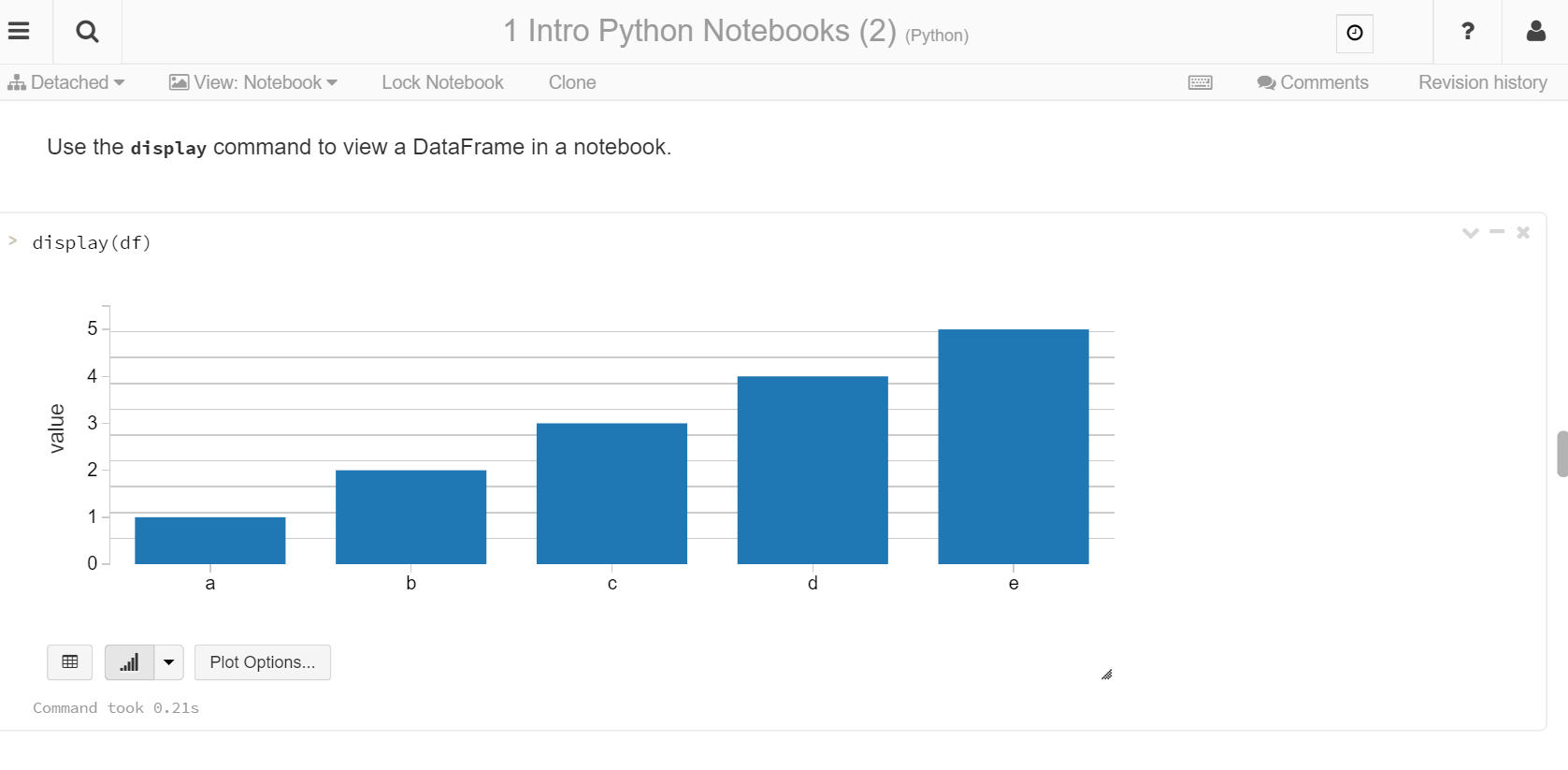

Databricks does offer its own flavor of scientific computing notebooks for your Spark code. The only notebooks I’ve used prior to working with Databricks notebooks are [Jupyter notebooks][4], primarily for Python coding. The Databricks notebooks have a sleeker appearance by comparison, and just feel a little more refined. I didn’t love the method for moving cells up and down (something I have to do often in notebooks), which required me to open and navigate a menu in the corner of the cell I wanted to move. On the other hand, being able to easily save the output of any cell to a CSV is really nice.

Since I didn’t have any S3 buckets readily full of data of the size that you would normally explore with Spark, I tried a few of the data sets that Databricks makes available for practicing with Spark. Some of these are pretty small (many of the data sets included with R are on there), but there are several that are quite large. I did some simple munging with a history of airline flight data, which had close to 1 billion rows. While I didn’t build any sophisticated models using MLlib, everything I did have Spark do executed flawlessly and quickly: building a dataframe of the data set, persisting the data in memory with the .cache() method, and running queries and frequency counts on subsets of the data. It took a few minutes to cache the data and seconds to run the queries after that.

There are many other features that Databricks offers that I didn’t get into much. You can build visualizations, and in fact, they offer integration with third-party apps like Tableau and Qlik.

I am quite impressed with Databricks and really enjoyed taking it for a spin, but for now I won’t be continuing beyond the trial. Currently, their plans start at about $250 a month, which is completely reasonable by enterprise standards, but too pricey for an individual like myself looking to increase my knowledge of Spark and do hobby projects in my free time. I think Databricks is in an interesting situation because they have created an incredibly powerful tool for businesses to solve big data problems. However, in the process of creating a BI tool, they have made what could also be a very powerful platform for beginners learning Spark and individuals looking to do research projects. Making Spark so easy to get started with could broaden its audience even further, because several individuals I have spoken with have mentioned how hard it is to get past the initial setup and configuration. I have communicated via email with some of the Databricks team, and they said they are considering the possibility of a more affordable “developer” tier for individual users with less ambitious Spark goals. Hopefully, those plans will materialize to make Databricks available to an even wider audience.